Dynamic feature extraction for flotation froth based on centroid-convex hull-adaptive clustering


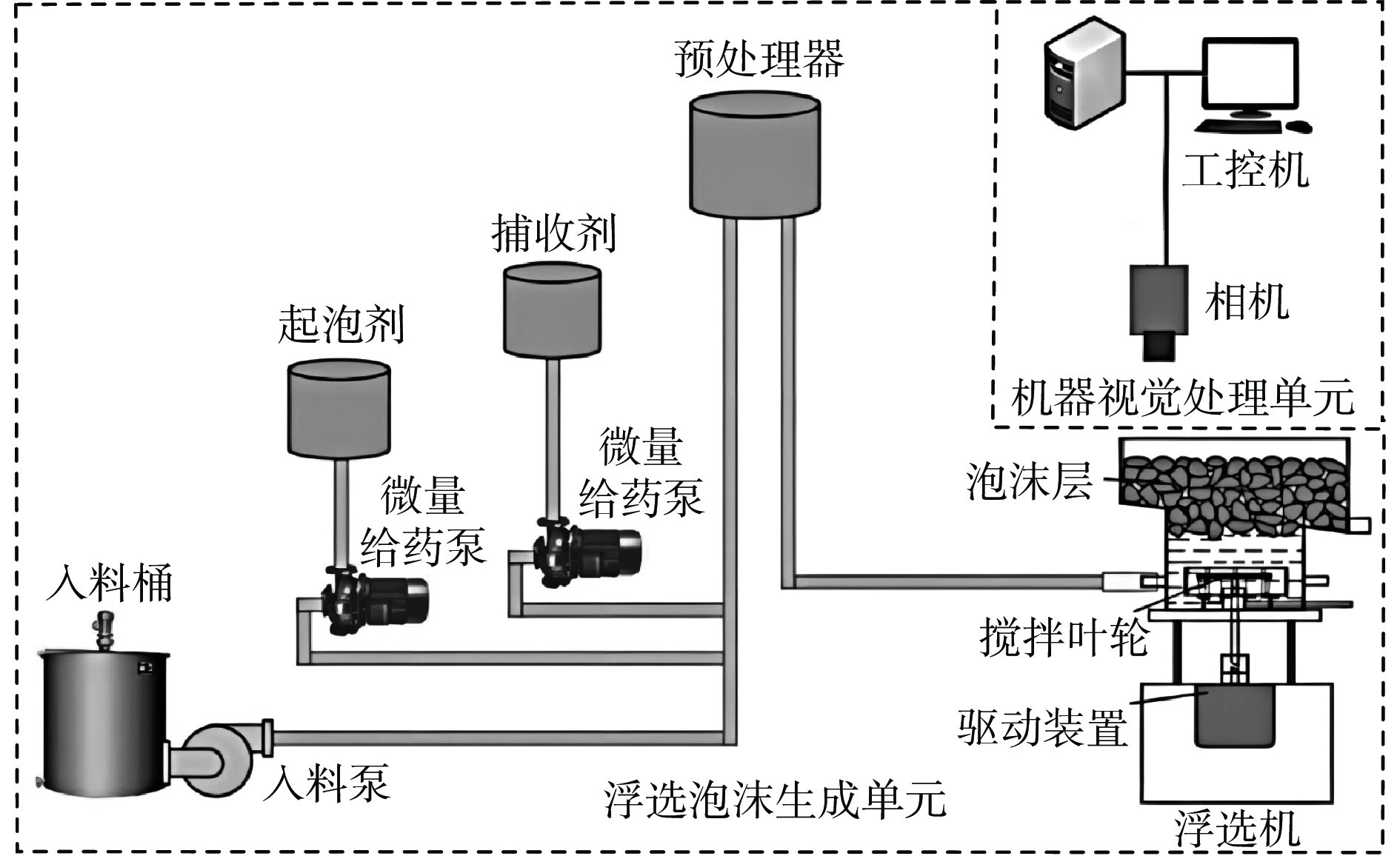

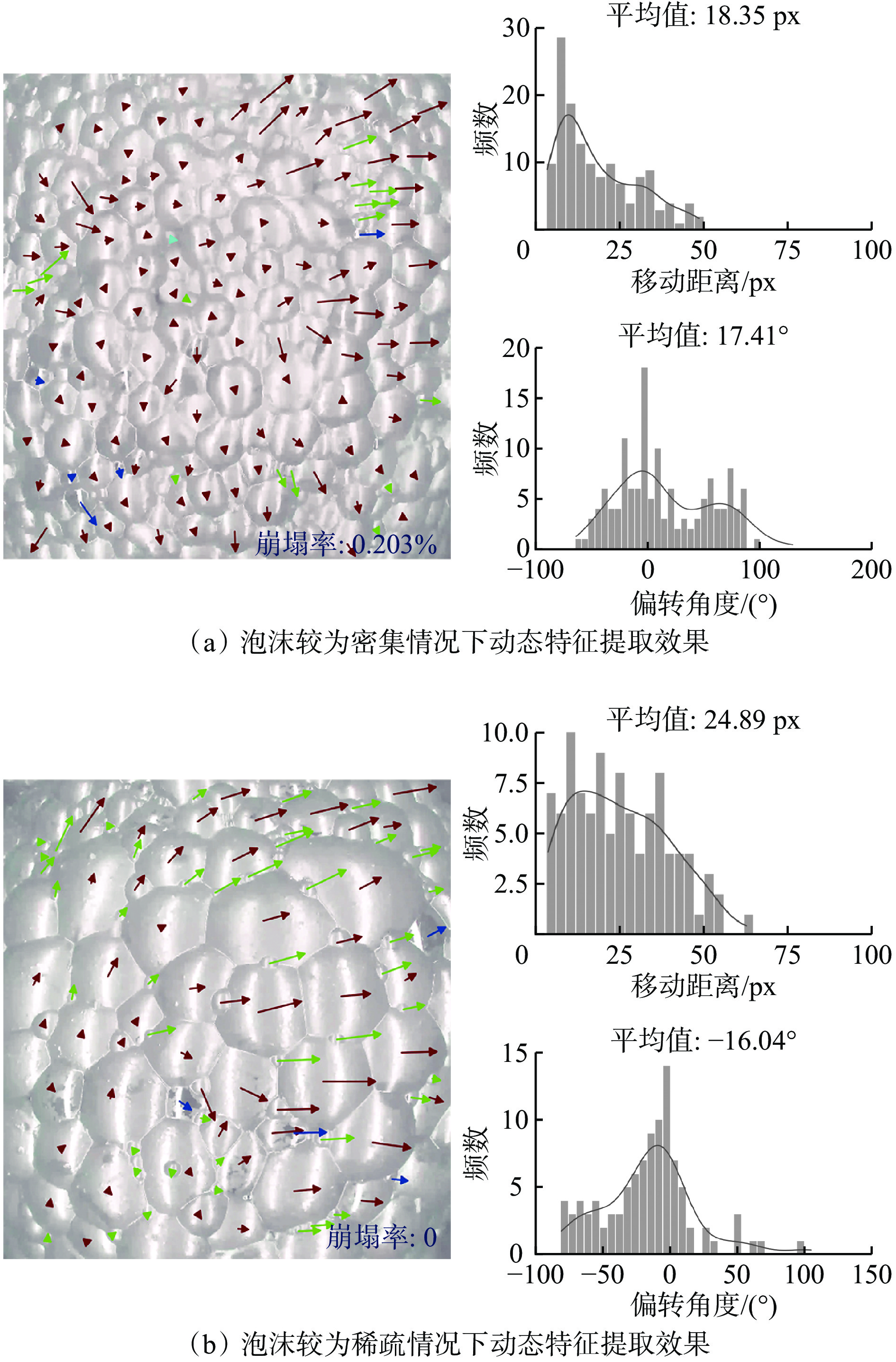

Abstract: Flotation sites are complex, and froth bubbles adhere to one another, which blurs their boundaries. Under these conditions, existing methods for extracting dynamic froth features (flow velocity and collapse rate) often cannot accurately delineate the sampling region that belongs to each froth bubble, cannot fully match feature point pairs between adjacent frames, and struggle to identify collapse regions. To address these problems, a dynamic feature extraction method for flotation froth based on a centroid-convex hull-adaptive clustering approach is proposed. The method uses an improved Mask2Former, which integrates the multi-scale feature extraction capability of Swin-Transformer, to locate froth centroids accurately and identify collapse regions effectively. An optimal convex hull evaluation function searches for the convex hull built from the centroids of the ring of froth bubbles adjacent to the target bubble, which fits a dynamic feature sampling region close to the actual froth contour. The detector-free local feature matching with transformers (LoFTR) algorithm matches feature point pairs between adjacent frames. For all feature point pairs inside the dynamic feature sampling region, a main feature adaptive clustering method based on the OPTICS algorithm extracts the main flow velocity of each froth bubble.

Experimental results show that, for ordinary froth centroid localization and collapse region identification, the method achieves accuracies of 88.83% and 97.92% and intersection over union (IoU) values of 77.90% and 96.52%, respectively. It achieves a feature point pair matching accuracy of 99.93% with an average exclusion rate of 2.69%. Under a variety of operating conditions, the method delineates feature sampling regions close to the actual froth boundaries, which enables quantitative extraction of the dynamic features of each froth bubble.
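The sampling-region step described in the abstract can be illustrated with a minimal sketch. It assumes the centroids of the neighboring froth bubbles and the matched point pairs are already available (in the paper they come from the improved Mask2Former and LoFTR, respectively). The function names `convex_hull`, `inside`, and `region_displacements` are hypothetical, and the paper's optimal convex hull evaluation function and OPTICS-based clustering are not reproduced here: the sketch only builds a hull from a given set of centroids and keeps the displacement vectors whose starting point falls inside it.

```python
def cross(o, a, b):
    """Z-component of the cross product (a - o) x (b - o)."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def inside(hull, p):
    """True if p lies inside or on the boundary of a counter-clockwise convex polygon."""
    n = len(hull)
    return all(cross(hull[i], hull[(i + 1) % n], p) >= 0 for i in range(n))


def region_displacements(neighbor_centroids, matches):
    """Displacements (dx, dy) of matched pairs that start inside the hull.

    neighbor_centroids: (x, y) centroids assumed to surround the target bubble.
    matches: list of ((x0, y0), (x1, y1)) point pairs between adjacent frames.
    """
    hull = convex_hull(neighbor_centroids)
    return [
        (x1 - x0, y1 - y0)
        for (x0, y0), (x1, y1) in matches
        if inside(hull, (x0, y0))
    ]


# Illustrative data only (not taken from the paper).
centroids = [(0, 0), (4, 0), (4, 4), (0, 4), (2, 2)]
pairs = [((1, 1), (2, 1)), ((3, 2), (4, 2)), ((5, 5), (9, 9))]
vectors = region_displacements(centroids, pairs)
```

The resulting displacement list is the kind of input a density-based clustering step would receive to separate the dominant motion from outliers. Dividing a displacement by the frame interval would give a velocity in pixels per unit time.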


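Table 1 Performance comparison of different algorithms in centroid classification and localization and collapse region identification tasks (%)

| Algorithm | Ordinary froth centroid IoU | Ordinary froth centroid accuracy | Smaller froth centroid IoU | Smaller froth centroid accuracy | Non-bubbling region IoU | Non-bubbling region accuracy | Collapse region IoU | Collapse region accuracy |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| OCRNet | 61.02 | 67.36 | 45.92 | 54.07 | 87.22 | 89.81 | 84.58 | 87.59 |
| PSPNet | 72.46 | 82.16 | 54.19 | 64.32 | 91.41 | 95.42 | 87.27 | 91.69 |
| DeepLabV3+ | 71.22 | 81.07 | 55.33 | 69.89 | 90.20 | 94.09 | 88.05 | 93.19 |
| CCNet | 60.71 | 73.56 | 34.44 | 44.39 | 73.80 | 74.89 | 72.14 | 76.68 |
| Segmenter | 66.27 | 77.66 | 59.24 | 73.15 | 84.89 | 93.43 | 79.93 | 95.19 |
| SegFormer | 62.03 | 72.58 | 42.66 | 51.70 | 88.94 | 95.16 | 87.87 | 92.63 |
| Improved Mask2Former | 77.90 | 88.83 | 69.65 | 87.78 | 96.68 | 97.11 | 96.52 | 97.92 |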
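For readers unfamiliar with the two metrics reported in Table 1, the following is a minimal sketch of how a per-class IoU and accuracy can be computed from a predicted and a ground-truth label map. The source does not define "accuracy", so this sketch assumes the per-class pixel accuracy common in segmentation toolkits, TP / (TP + FN). The function name and the toy label maps are hypothetical.

```python
def class_iou_and_accuracy(pred, truth, cls):
    """Return (IoU %, accuracy %) for one class over two equally sized label maps.

    IoU      = TP / (TP + FP + FN)
    accuracy = TP / (TP + FN)   (assumed definition, not confirmed by the source)
    """
    tp = fp = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p == cls and t == cls:
                tp += 1
            elif p == cls:
                fp += 1
            elif t == cls:
                fn += 1
    iou = 100 * tp / (tp + fp + fn) if (tp + fp + fn) else float("nan")
    acc = 100 * tp / (tp + fn) if (tp + fn) else float("nan")
    return iou, acc


# Toy 2 x 3 label maps; class 1 stands in for, e.g., a collapse region.
truth = [[1, 1, 0],
         [0, 1, 0]]
pred = [[1, 0, 0],
        [0, 1, 1]]
iou, acc = class_iou_and_accuracy(pred, truth, cls=1)
```

Because IoU also penalizes false positives, it is never higher than this accuracy for the same class, which matches the pattern seen in every row of Table 1.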

Table 2 Comparison of feature point pair extraction results under different algorithms

| Algorithm | Mean feature points detected (±SD) | Mean erroneous points excluded (±SD) | Mean exclusion rate/% | Mean final matched feature points (±SD) | Mean matching accuracy/% (±SD) |
| --- | --- | --- | --- | --- | --- |
| SIFT+FLANN | 1669.37 (±303.41) | 803.12 (±214.20) | 48.11 | 866.25 (±355.23) | 98.35 (±0.47) |
| SIFT+BF | 1669.37 (±303.41) | 461.63 (±82.65) | 27.65 | 1207.75 (±278.69) | 78.80 (±10.11) |
| SIFT+RANSAC | 1669.37 (±303.41) | 830.75 (±250.49) | 49.76 | 838.63 (±378.71) | 98.93 (±0.37) |
| SURF+FLANN | 2131.88 (±166.28) | 990.38 (±278.63) | 46.46 | 1141.5 (±373.15) | 99.40 (±0.44) |
| SURF+BF | 2131.88 (±166.28) | 601.50 (±125.63) | 28.21 | 1530.38 (±227.45) | 85.08 (±9.32) |
| SURF+RANSAC | 2131.88 (±166.28) | 1049.75 (±273.11) | 49.24 | 1082.12 (±376.68) | 99.02 (±0.36) |
| AKAZE+BF | 1279.75 (±260.92) | 236.63 (±101.56) | 18.49 | 1043.125 (±247.66) | 92.90 (±6.58) |
| AKAZE+ORB | 1279.75 (±260.92) | 221.88 (±103.37) | 17.34 | 1057.88 (±252.56) | 94.81 (±4.88) |
| AKAZE+GMS | 1279.75 (±260.92) | 354.75 (±173.71) | 27.72 | 925.0 (±282.74) | 99.85 (±0.21) |
| LoFTR | 3510.88 (±118.99) | 94.37 (±62.04) | 2.69 | 3416.50 (±277.95) | 99.93 (±0.33) |
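The columns of Table 2 appear to be linked by simple arithmetic: the exclusion rate is the share of detected points that were excluded, and the final match count is the detected count minus the excluded count. The sketch below checks this against two rows of the table. The source does not state these formulas, so they are an assumption, and the function name `exclusion_summary` is hypothetical. Since the table reports averages over several frames, small rounding differences are to be expected.

```python
def exclusion_summary(detected, excluded):
    """Return (exclusion rate in %, remaining matched points), both rounded to 2 decimals."""
    rate = round(100 * excluded / detected, 2)
    remaining = round(detected - excluded, 2)
    return rate, remaining


# Mean values copied from Table 2.
loftr_rate, loftr_remaining = exclusion_summary(3510.88, 94.37)
sift_rate, sift_remaining = exclusion_summary(1669.37, 803.12)
```

Under this assumption, the LoFTR row reproduces the 2.69% exclusion rate quoted in the abstract, and the SIFT+FLANN row reproduces the 48.11% in the table.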

